IDZ Interview

Intel Developer Zone Interview

Update 12th Dec 2017: this post is now published on Intel Developer Zone.

Tell us about your background.

Professionally, I’ve been a technologist at a large global investment bank, an analytics consultant for a major UK commercial and private bank, a full-stack developer at a wellness startup, and briefly an engineering intern at an airline. In my spare time I am the creator and author of Mathalope.co.uk (a tech blog visited by 120,000+ students and professionals from 180+ countries so far), a volunteer developer for the Friends of Russia Dock Woodland website, an open source software contributor, a hackathon competitor, and a member of the Intel Software Innovator Program. I am currently working on becoming a better machine learning engineer and educator.

What got you started in technology?

While studying for a master’s in aeronautical engineering at Imperial College London, I learnt to write small Fortran/Matlab programs that could take, say, satellite data as input and output a geographical location on Earth. I then started my professional technology career in 2008 at a global investment bank, where I collaborated with colleagues from all four global regions (EMEA, ASPAC, NAM and LATAM) to develop and roll out a fully automated capacity planning and analytics tool, along with its governance and processes, protecting more than 100,000 production systems (across Windows, UNIX, AIX, VM, and Mainframe platforms) from the risk of overloading. I built the system with proprietary technologies such as SAS, Oracle, Autosys batch scheduling, Windows/UNIX scripts, and internal configuration databases. In 2014 I decided to learn about open source technologies in my spare time, and as a result created Mathalope.co.uk. Since then I’ve learnt to program in more than 10 languages; my current favorites are Python and JavaScript, due to their expressiveness, syntax, and relevance to building modern applications. You can check out my GitHub contributions here.

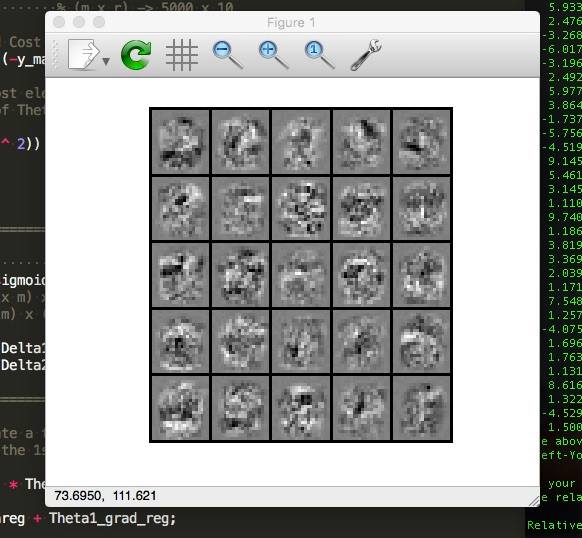

Over the past year I have also taught myself machine learning and parallel distributed computing with the help of deeplearning.ai, Stanford’s Machine Learning courses, Andrej Karpathy’s Stanford CS231n: Convolutional Neural Networks for Visual Recognition, the Colfax High Performance Computing Deep-dive series, and many other deep learning books and courses online.

What projects are you working on now?

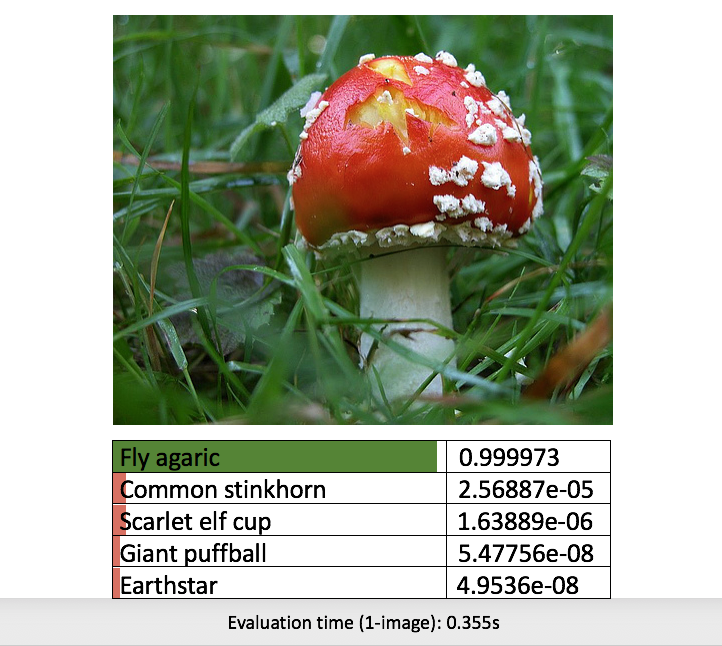

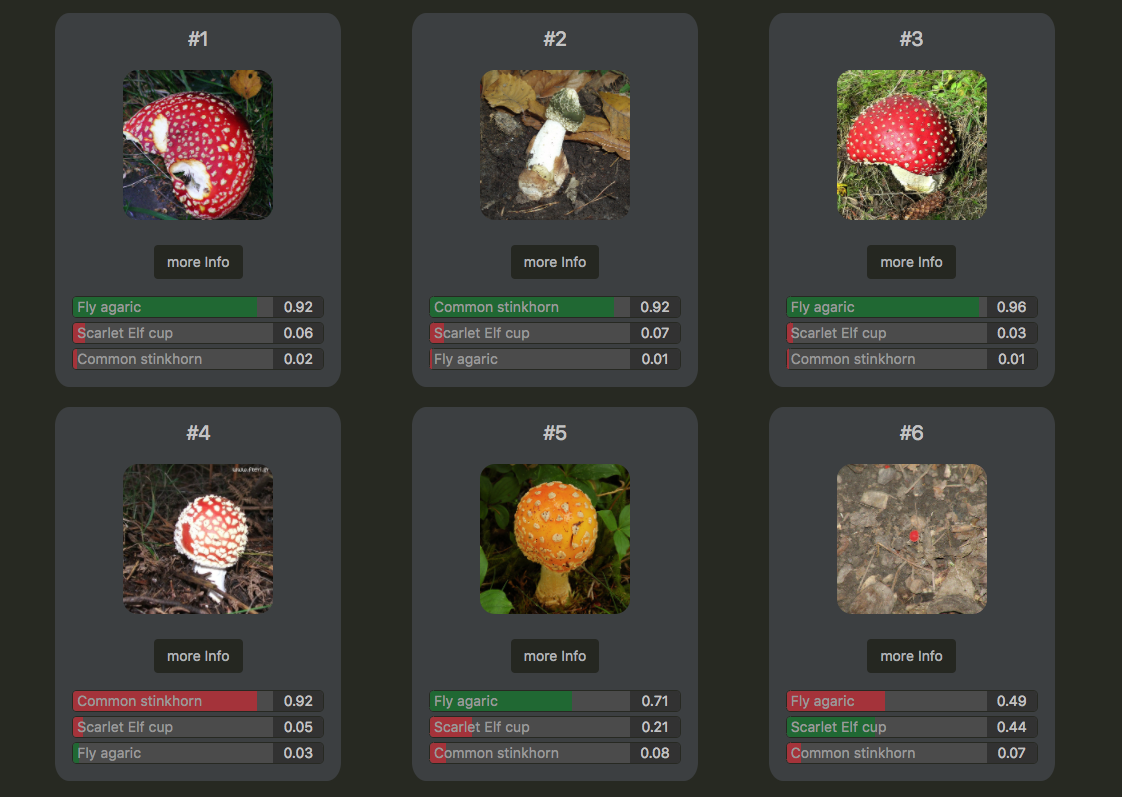

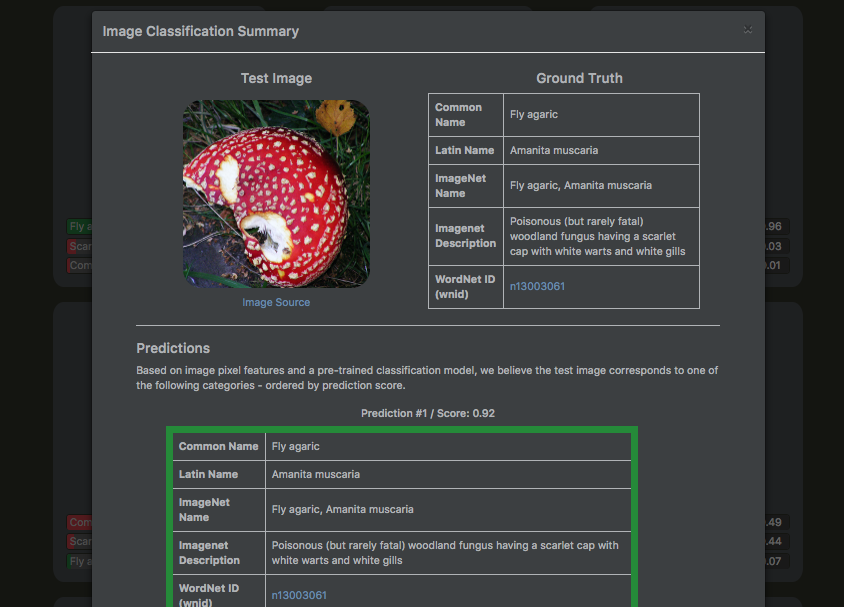

I am currently building fungAI.org, a machine learning application that aims to identify wild mushroom species from images using deep learning techniques. The project was inspired by a friend’s Facebook post from a walking trip:

“hey do you know what mushroom this is?”

Coincidentally, my partner, a conservationist, is also a mushroom enthusiast, so naturally we formed a couple’s team. We think the project will be fun and educational.

You can read more about the project concept, try out an initial ReactJS frontend toy demo, and check out the Intel DevMesh Fungi Barbarian project page. All of the project’s source code is open source on GitHub; you can find more demos and GitHub repository links here.

Tell us about a technology challenge you’ve had to overcome in a project.

During the summer of 2015 I spent an entire weekend just trying to get OpenCV-Python, Windows, and the Anaconda package manager to work together for a personal computer vision project. I remember searching hard on the internet for solutions, trying out many of them, and failing countless times. After many rounds of trial and error and investigation, I eventually solved the problem by combining multiple “partially working” solutions. In the end I wrote a blog post summarising my solution, which has since been viewed more than 120,000 times. To widen its reach I also posted it as an answer to a Stack Overflow question: the question has so far been viewed over 200,000 times, and my answer has received 50+ upvotes (“good citizen brownie points”). It turned out that many developers around the world had run into similar issues at the time and solved them with the help of these write-ups.

This experience taught me an important lesson about making an impact: it doesn’t have to mean building the next Google or Facebook. It can be as simple as writing up a summary of how you solved a problem and sharing it online. We only get to live once.

What trends do you see happening in technology in the near future?

A recent talk presented by O’Reilly and Intel Nervana in September 2017, “AI is the New Electricity” by Andrew Ng, discussed trends and value creation in machine learning. Here is my one-line summary of Andrew’s point:

Today, the vast majority of value across industry is created by Supervised Learning, closely followed by Transfer Learning.

Personally, I am super excited about transfer learning and believe this technique will be used widely to solve many specialized problems. Say, for instance, we wish to train a model to recognize different types of flowers. Instead of spending months training a model from scratch on millions of flower images, we can take a massive shortcut: take a pre-trained model like Inception v3, which is already very good at recognising objects from ImageNet data, and use it as a starting point, typically by keeping its learnt feature layers and retraining only the final classification layer on our flower images. The end result? You need only about 200 images per flower category, and training the new model takes only about 30 minutes on a modern laptop CPU.

This suddenly makes deep learning very inclusive for everybody.

An ultra-powerful, expensive graphics processing unit (GPU) is no longer a “must-have” for solving deep learning problems. Transfer learning and open source software together have made deep learning more inclusive and accessible to all. The power of inclusiveness will enable stronger communities, knowledge sharing, and further technological advancement of deep learning in the near future.
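To make the transfer-learning shortcut described above a little more concrete, here is a minimal sketch of its simplest form, often called feature extraction: the pre-trained network is kept frozen and only a new classification layer is trained on a small labelled dataset. It assumes NumPy only. The “pre-trained” base is a hypothetical stand-in (a fixed random projection followed by tanh) rather than real Inception v3 weights, and the tiny three-class dataset is synthetic, so whatever it prints says nothing about real flower or mushroom images.

```python
import numpy as np


def softmax(z):
    # Subtract the row-wise max for numerical stability before exponentiating.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def extract_features(X, base_W):
    # Frozen "pre-trained" base. HYPOTHETICAL stand-in: a fixed projection + tanh
    # in place of the penultimate layer of a network such as Inception v3.
    # base_W is only read here, never updated.
    return np.tanh(X @ base_W)


def cross_entropy(probs, y):
    # Mean negative log-likelihood of the true class.
    return -np.mean(np.log(probs[np.arange(len(y)), y] + 1e-12))


def train_head(features, y, n_classes, lr=0.1, epochs=200):
    # Train ONLY a new softmax classification layer on top of the frozen features.
    n, d = features.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[y]
    losses = []
    for _ in range(epochs):
        probs = softmax(features @ W + b)
        losses.append(cross_entropy(probs, y))
        grad_logits = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        W -= lr * features.T @ grad_logits
        b -= lr * grad_logits.sum(axis=0)
    return W, b, losses


rng = np.random.default_rng(0)
n_inputs, n_features, n_classes = 32, 16, 3

# Stand-in for pre-trained weights; kept frozen throughout.
base_W = rng.normal(scale=0.3, size=(n_inputs, n_features))
base_W_before = base_W.copy()

# Small synthetic target dataset: 3 classes, 20 examples each.
centers = rng.normal(size=(n_classes, n_inputs))
y = np.repeat(np.arange(n_classes), 20)
X = centers[y] + 0.5 * rng.normal(size=(len(y), n_inputs))

feats = extract_features(X, base_W)  # computed once; can be cached between epochs
W, b, losses = train_head(feats, y, n_classes)
preds = np.argmax(softmax(feats @ W + b), axis=1)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, train accuracy {np.mean(preds == y):.2f}")
```

In a real project the base would be a network such as Inception v3 loaded with ImageNet weights, and each image would be passed through it once to produce its features. Because only the small head is trained, the expensive part of deep learning is skipped, which is why a laptop CPU can be enough.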

How does Intel help you succeed?

Intel supports innovative projects, such as fungAI.org that I’m currently working on, by providing access to state-of-the-art deep learning technologies: Intel Xeon Phi-enabled cluster nodes for model training, the Intel Movidius Neural Compute Stick for embedded machine learning applications, and more. At a personal level, Intel has given me access to a community of technology experts and innovators in artificial intelligence (AI), the Internet of Things (IoT), virtual reality (VR), and game development, from whom I get to learn and draw inspiration. Recently I was sponsored by Intel to take part in events including the Seattle Intel Software Innovator Summit 2016 and Nuremberg Embedded World Expo 2017, where I had the opportunity to travel, learn, and contribute to the tech community. I really appreciate the effort the Intel Software Innovator Program team has put into enabling the long-term success of the innovation community. It has been a privilege, and I thank you all for the opportunities.

Outside of technology, what type of hobbies do you enjoy?

Since 2009 I’ve been playing in social, mixed-gender, non-contact touch rugby and tag rugby leagues here in London. It’s a fun, active way to socialize and meet new friends. I would highly recommend this social sport to anybody.